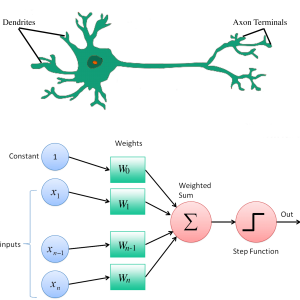

The neural network, and the perceptron that underlies it, is a mathematical representation of the biological cells we call neurons and the intricate networks they form. This article draws a high-level analogy between how a neural network actually learns (back propagation) and how the human brain behaves, to offer another perspective on the learning process.

Perceptrons, like neurons, react to the values of their input signals. A perceptron calculates the weighted sum of its input values and then applies an activation function that transforms this sum into the kind of output the task needs. Each layer of a multilayer perceptron takes the output of the previous layer as its input. Learning happens as the weights attached to the inputs are adjusted over the course of training. The goal of each adjustment is to reduce the loss, that is, the gap between the network's predictions and the true target values provided in the training data.
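The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration under assumptions not stated in the article: a sigmoid activation, a bias term added to the weighted sum, squared error as the loss, and arbitrary, hypothetical weight values for a tiny 2-input, 2-hidden-unit, 1-output network.

```python
import math

def sigmoid(z: float) -> float:
    """Activation function (assumed here): squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer(inputs, weight_rows, biases):
    """A layer is several perceptrons that all read the same inputs."""
    return [perceptron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def squared_error(prediction, target):
    """Loss (assumed here): squared gap between prediction and true target."""
    return (prediction - target) ** 2

# Hypothetical weights for a 2 -> 2 -> 1 network.
x = [0.5, -1.0]
hidden = layer(x, [[0.4, 0.3], [-0.2, 0.8]], [0.0, 0.1])
# The output layer consumes the hidden layer's output, not the raw inputs.
output = layer(hidden, [[1.0, -1.0]], [0.0])[0]
loss = squared_error(output, target=1.0)
print(f"prediction={output:.3f} loss={loss:.3f}")
```

Training would then consist of nudging the numbers in the weight lists so that `loss` shrinks.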

This is where back propagation comes in. Back propagation is the key to how neural networks learn. Although the math involves derivatives to calculate gradients, the learning process resembles the human brain's ability to arrive at the correct solution to many a problem after sleeping on it. How many times have you gone to bed frustrated by something that seemed complex in the moment, only to wake up in the morning with the solution already figured out? During the REM phase of sleep, our brain pieces together and cleans up the information available to it, resulting in better answers and thoughts. This is akin to the improved predictions a neural network makes once it has had a chance to propagate the loss computed during the forward pass backward through the network and adjust its weights accordingly.
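To make "derivatives to calculate gradients" concrete, here is a hand-written backward pass for a single sigmoid neuron. It is a toy sketch, assuming squared-error loss, one training example, and a hypothetical learning rate `lr`; real networks apply the same chain rule layer by layer, usually through an automatic-differentiation library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w, b):
    """Forward pass: y = sigmoid(w . x + b)."""
    z = sum(xi * wi for xi, wi in zip(x, w)) + b
    return sigmoid(z)

def backward(x, w, b, target):
    """Chain rule for loss = (y - target)^2 with y = sigmoid(w . x + b)."""
    y = forward(x, w, b)
    dloss_dy = 2.0 * (y - target)
    dy_dz = y * (1.0 - y)                 # derivative of the sigmoid
    dloss_dz = dloss_dy * dy_dz
    grad_w = [dloss_dz * xi for xi in x]  # dz/dw_i = x_i
    grad_b = dloss_dz                     # dz/db = 1
    return grad_w, grad_b

def train_step(x, w, b, target, lr=0.5):
    """Gradient descent: move each weight against its gradient."""
    grad_w, grad_b = backward(x, w, b, target)
    new_w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
    new_b = b - lr * grad_b
    return new_w, new_b

# Hypothetical example: one input vector whose true target is 1.0.
x, target = [0.5, -1.0], 1.0
w, b = [0.1, 0.1], 0.0
for step in range(5):
    loss = (forward(x, w, b) - target) ** 2
    print(f"step {step}: loss={loss:.4f}")
    w, b = train_step(x, w, b, target)
```

Each printed loss should be lower than the one before it, which is the "improved prediction" the analogy refers to.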

Granted, back propagation can happen after every single input, but that may not be efficient. Imagine going into a deep, REM-like phase every time we hit a roadblock. Mini-batching, or batching in general, is how we gain efficiency in adjusting weights: the gradients from a group of examples are combined and applied in one update, much as the brain processes a wealth of information before adjusting its assessment of that information.
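The difference between updating after every example and updating once per mini-batch can be sketched with a one-variable linear model. Everything here is a hypothetical setup chosen for illustration: noise-free data from y = 2x + 1, squared-error loss, plain gradient descent, and fixed values for `lr` and `epochs`. The sketch only counts weight updates; it does not measure the speed-ups that real frameworks get from processing a batch in parallel.

```python
import random

def gradients(batch, w, b):
    """Average gradient of squared error for y_hat = w*x + b over a batch."""
    gw = gb = 0.0
    for x, y in batch:
        err = (w * x + b) - y
        gw += 2.0 * err * x
        gb += 2.0 * err
    n = len(batch)
    return gw / n, gb / n

def train(data, batch_size, epochs=200, lr=0.05, seed=0):
    """Shuffle each epoch, then apply one weight update per mini-batch."""
    rng = random.Random(seed)
    w, b, updates = 0.0, 0.0, 0
    data = list(data)  # copy so shuffling does not reorder the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            gw, gb = gradients(batch, w, b)
            w -= lr * gw
            b -= lr * gb
            updates += 1
    return w, b, updates

# Hypothetical noise-free data drawn from y = 2x + 1 (21 points).
data = [(k / 10, 2 * (k / 10) + 1) for k in range(-10, 11)]
for bs in (1, 7, len(data)):
    w, b, updates = train(data, bs)
    print(f"batch_size={bs:2d}: w={w:.3f} b={b:.3f} weight updates={updates}")
```

All three settings should recover roughly w = 2 and b = 1, but with batch sizes of 1, 7, and 21 they make 4200, 600, and 200 weight updates respectively.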

Ultimately, a lot of efficiency in neural networks can be gained simply by paying closer attention to how our brains perceive, categorize, and process information throughout the day.